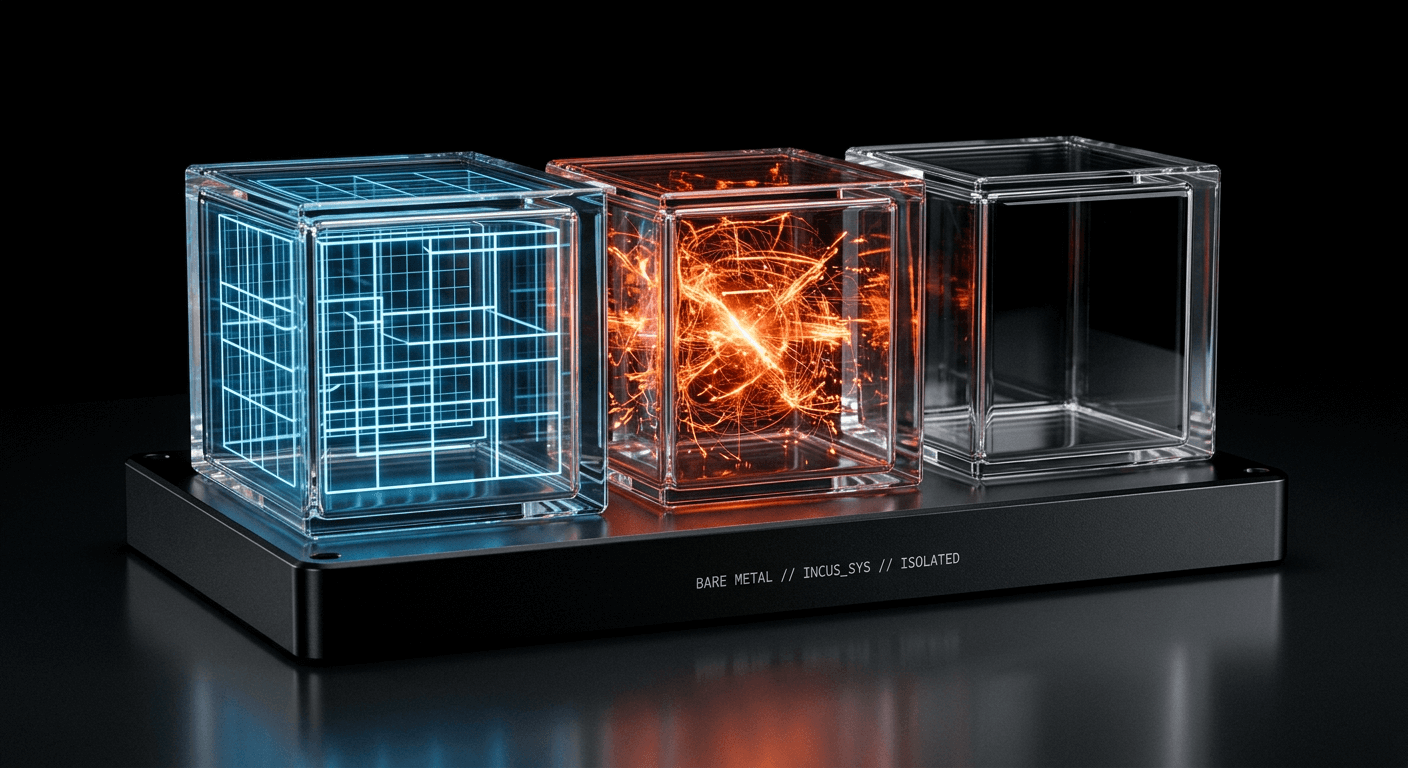

Isolating AI Coding Agents on Bare Metal: Incus, Podman, and a $50/Month Server

How Singular uses system containers, rootless Podman, and declarative YAML configs to run isolated, multi-project AI agent environments on a single $50/month bare-metal server.